If there is one thing that gets people relatively annoyed is that their data is not available for a certain amount of time. You can’t get to your sales data, orders cannot be fulfilled, your tracking and tracing options for your goods on their way to your customers does not show up etc. That makes you really crunching your teeth whilst waiting for this stuff to come back. Take that feeling into your head and extrapolate it to the point you start to realize this will not return, ever…….got it? That is when data-corruption has made its mark.

Data corruption is of all ages. When the IBM folks in the 50-ies and 60-ies were developing and improving on their 5 tonne disk-drives one of their observations was that the state of the magnetic field of a coil sometimes could change due to external influences. One of them was the erroneous settling of the write-head above the cylinder resulting in (partly) wrong sectors being overwritten. When they sorted that out another observation was that the state of magnetic particles changed over time due to a different state of their neighbors resulting in a negative charge slowly changing to positive and vice-versa. Another problem is the deterioration of the material itself rendering it unable to receive a magnetic charge at all. When bits flip like this it is impossible to determine if a piece of data is good or bad.

The above shows a few examples on how things can go wrong when they are handled by or are already stored on a disk-drive. Numerous precautions have been introduced over time in the likes of read-after-write (checking if what is written to disk is actually readable again), scrubbing (checking if sectors are marginal) and if so move them to spare sectors reserved on the drive, using additional blocks for checksum markers, using additional drives to write parities (RAID) etc.

A similar thing happened when SAN’s got introduced. Obviously it was already known from the networking world that frames could get corrupted due to all sorts of problems. When Fibre-Channel was developed the maximum speed on normal networks was around 10Mb/s (ethernet) or 16Mb/s (Token-ring) and “fast-ethernet” 100Mb/s was not even released yet. The engineers working on FC specifications wanted to use 1Gb/s from the get-go and many of their industry colleagues were wondering if they were smokin’ something. They didn’t but given the fact the protocol was required to carry an enormous amount of data (remember this was end 80-ties beginning 90-ties) coupled with one or more channel protocols (SCSI/FCP, HIPPI, IPI, SBCCS/ESCON) it had to be extremely reliable. Having a disk-drive sitting on the other-end of a networked infrastructure had never been done before so all factors had to be taken into account to make it work from the start.

Networks are assembled based on one or more individual links and segments. SAN’s were no different. This meant that error prevention and verification methodoligy was required on at least two levels in the protocol stack on the lowest possible layers in order to be able to identify these errors as soon as possible. On the FC0 (hardware) layer the most stringent requirements were drawn up to remove the necessity of too many checks further up the stack. As electrical influences were significant in much of the equipment it was determined that a good DC-balance was paramount in order to be able to detect bit-flips on the lowest level possible. An old IBM royalty-free coding schema called 8b10b encoding/decoding provided the ability to add some sort of inline verification code which made sure that A: it could be done in hardware without external overhead and B: it also provide the requirement to obtain a good DC balance to eliminate capacitance factors of electrical circuits. (See other articles I wrote on this topic).

Having the individual links covered on only a byte level obviously did not resolve the end-to-end error detection and subsequent recovery operations. For that we needed to go one layer higher in the FC protocol stack (FC2 Framing and Signaling) where we could do some more nifty stuff in the way adding a CRC to an entire frame. By selecting the CRC32 mathematical equation the FC protocol was able to use a strong and well established method for error-detection but was also able to do this in hardware resulting in no overhead that would’ve been incurred when external processing options would’ve been chosen. From a performance point of view this was an obvious choice.

By now having an link and end-to-end error detection method there is no way that any bit-flips can result in data-corruption as both initiator HBA’s as well as storage array will simply discard any frame that has an invalid CRC checksum. Therefore an IO is not able to complete and the upper protocol layers (SCSI/FCP, IP, NVMe etc) have to make sure that any recovery operation is initiated from that level. The main reason for this is the shortage of available resources in switching technology but also to give the upper layers the ability to notify the operating systems and applications of any failed IO’s. If the networking layer would’ve been required to cater for any recovery operation not only would the amount of resources in the sense of memory, CPU power etc etc require an enormous boost but a secondary method would’ve been required to maintain OS and application coherency wit all outstanding IO’s. Latency timers and synchronization would also be required between the different parts of the storage IO stack. Besides the fact it is very difficult to do, it also means that the exponential cost increase of such solutions and the followup adoption rate in businesses would’ve fallen short in the first generation of products already.

As described above the chance of data getting corrupted in a storage network or on a storage array is NILL. Frames may be discarded or blocks on an array may become useless due to a phenomenon called bit-rot but in both cases the applications will not get incorrect data back.

This leaves a few other parts in the IO stack which could be the cause. The majority of errors related to data-corruption is in software bugs in file-systems, volume-managers, MPIO, SCSI-layer or HBA driver/firmware. Whenever there is something going wrong there the data is already corrupt before it hits the FC2 or FC1 layer and as such the payload will be packaged with a CRC32 of that data including the corruption. It then travels the same way as a non-corrupt payload and the data will simply be written to disk as is. Obviously when then read by an application it would yield an invalid value but the cause would not be the storage network or array.

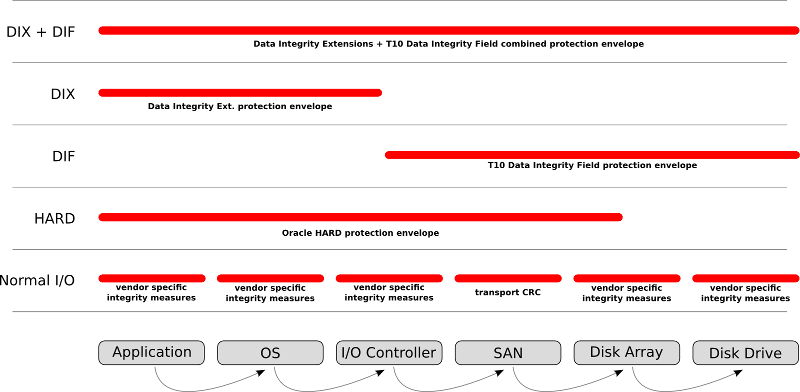

The way of preventing this is an end-to-end check from application to spindle verification method. For this the industry came up with a so called SCSI PI (Protection Information) DIF (Data Integrity Field)/DIX (Data Integrity Extensions) option. This entices the normal block size on an addressable sector to expand from 512 bytes to 520 bytes. The 8 bytes can then be used to add some sort of parity check-sum by the application in order to enable array to verify if what was actually send by the application is actually written to disk. Many arrays already did this and used these 8 bytes internally to check for internal integrity but this could not be expanded to the application before the SCSI extensions allowed this.

One of the best pictures I found was from Martin Peterson who captured the data integrity options in one view.

Since DIF/DIX is a SCSI layer implementation it does not touch on the SAN side itself. The fabric switches have no influence if these “parity-bits” are set or not. For FC it is just another frame which gets CRC check-summed and encoded/decoded on the L0 layer.

Not all vendors do support this feature though so if you require full end-to-end data verification from application to spindle have a chat with your (pre-)sales engineer to see what options are provided.

I hope this brings some clarity in the reasoning why and where data corruption can occur and optionally can prevent it. A rigourous maintenance doctrine on driver/firmware verification and updates across the entire IO stack will also help in preventing data-corruption plus lots of other problems.

As a last remark I cannot emphasize the need to an accurate and stringent back-up and recovery regime. If data corruption occurs due to wear and tear of media the only way to get things back is via a restore of a copy of that data or otherwise a very manual and labor-intensive process (if possible at all).

Regards,

Erwin