In the IT world a panic is most often related to a operating systems kernel to run out of resources or some unknown state where it cannot recover and stops. Depending on the configuration it might dump its memory contents to a file or a remote system. This allows developers to check this file and look for the reason why it happened.

A fibre-channel switch running Linux as OS is no different but the consequences can be far more severe.

In a case outlined as above where a FC switch panicked and restarted changes are very likely that attached hosts also have observed impact. Lost LUN’s results in path-failures and if no redundancy has been built you can rest assure applications will go down.

The panic on a technical term very often results pretty quickly in an organizational one where any form of impact is nervously been analyzed, systems administrators are watched by hawk-eyes and 5 minute updates are being demanded even though there may be nothing to report.

Normally the switch returns in its normal state as very often it simply bounced due to a resource problem like a memory leak or a non-restartable process died due to a bug. The term RCA (Root Cause Analysis) is then thrown around and the organisational panic grows to significant proportions. Unfortunatly I have to regret that an RCA is often not possible. If the switch was able to save a core-dump/memory-dump the Brocade FOS developers are able to figure out what went wrong.

In many occasions, especially when host or arrays are impacted due to FC traffic mishaps,an RCA is not possible simply due to the fact the diagnostic tools and features are not there as the running firmware is simply too old.

Back in 2011 I wrote an article called “Maintenance”. (see here) In there I outlined the same issues I keep seeing in my day-to-day job and I must regrettably acknowledge not much has happened in the 5 years since.

Appalling state of Storage Networks

On average the firmware of a Brocade or Cisco FC switch is over 2 years old. That basically means that an average SAN is exposed to approximately 400 defects and bugs that have been solved in subsequent releases. It becomes even more scary when you take into account that all new deployments are using firmware which have been flagged as GA or RGA (General Available / Recommended General Available) releases. This does result in the fact that older deployments statistically use even older code-levels therefore exponentially increasing the number of defects/bugs they can run into therefore linearly aligning the chance something goes wrong. And believe me when things can go wrong they go wrong with a bang.

As the title of this post already shows the overall state of storage network I see on a day-to-day basis is appalling. Link related events going back months, temperature sensor events showing issues with airflow, code-levels far out of date etc. etc.

Unfortunately a FC switch is too often seen as a “black-box” which is simply handled as a plug-and-play device. This is a huge misconception and this kind of equipment should be actively maintained in a similar, of even more rigorous, fashion as operating systems and applications. If one switch observes an issue it can, and most often will, impact your entire server install base.

I can’t stress enough the importance of actively maintaining a storage network. It will not only save you from downtime but will also provide a much better insight in what is actually happening in your storage environment. This allows not only for a significantly improved up-time ratio but in the event issues do arise, the added diagnostics capabilities do provide a much better insight into where things went wrong and what to do about it. The time-to-resolution is therefore significantly reduced once again improving your overall services.

Things to do

- Maintain code levels. Try to keep to RGA code-levels provided by your vendor as much as possible. Many bug fixes and code-improvements are made with every release and it really pays off running recent firmware.

- Introduce pro-active features which resolve issues before they spread across the fabrics. This includes port-blocking and error-threshold

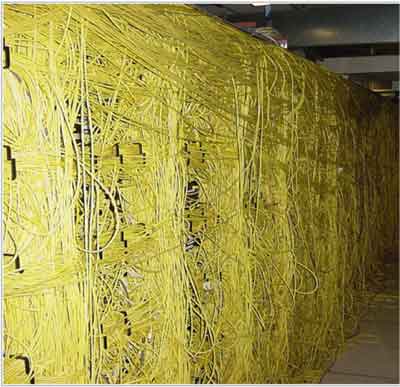

- Proper cabling. OM1 and OM2 cabling is not suitable for carrying 8G or 16G speeds traffic. Make sure that if you require these higher speeds the correct cables are used. This includes patch-panels and extenders.

- Cable hygiene. Make sure cabling is well looked after. This includes using dust-covers on cable ends and SFP’s as well as keeping bend-radius to an absolute minimum. Being able to play Led Zeppelin’s Moby Dick on a set of optical cables is not a good option.

- Keep track of events and action each of them. Especially Warning, Error and Critical events should be looked after right away and the cause of these should be fixed.

Obviously there are numerous other best-practises and many of these from a configuration perspective I’ve written down in my Brocade Best Practices Config Guide which you can find over here.