If there is one thing I would consider “the” blunder of FOS engineering, it has to be Traffic Isolation zoning. I mean, creating such an administrative nightmare, with obscure directions causing confusion and nasty pitfalls when one thing goes wrong, is on many occasions a recipe for disaster.

The great thing about storage networking, and especially with Brocade, is that you don’t have to know anything about it to get it working. Plug two switches together, add an HBA and an array link, do some simple clickety-click on the array and voila: LUNs… For any Windows or Unix admin, a walk in the park. (And yes, I’ve seen hundreds of these. Maybe even thousands over the course of my career in this line of work…)

The danger of this approach is that it’ll stop working fairly soon. So the elementary Storage Networking skill of zoning is learned pretty quickly, as is looking at performance counters, either in CLI output or in a monitoring tool. On many occasions the SAN will be expanded with additional switches to cope with growth, and in a fairly short time-span you’ll see a mesh of links with traffic going left, right, up and down. This then leads to the question: “How do I make sure that traffic from HBA A goes to array port B over ISL Z without impacting other traffic or being impacted itself by other traffic?” Up until FOS 5 you were out of luck. The routing schema was defined by FSPF (and still is, btw.) and there was no option other than adding a dedicated, physically separated set of switches. If you already had a pile of these switches, you could potentially use Fibre Channel Routing (FCR) to still be able to use some other, shared, equipment.

This often did not fare well in RFIs and RFPs, where a CFO had to sign off on an additional knapsack of money just for this purpose. Brocade did not have an immediate answer, whereas Cisco could provide this traffic separation by using VSANs and ACLs on their trunks. SAN-OS/NX-OS simply had/has the option to create two ISLs (trunks) and allow VSAN X traffic to go over trunk A and VSAN Y traffic over trunk B. That’s it. No strings attached (unless you needed IVR, Inter VSAN Routing, for equipment sharing, which require(d/s) an Enterprise license that also came with a nice price tag, but that’s a different story.)

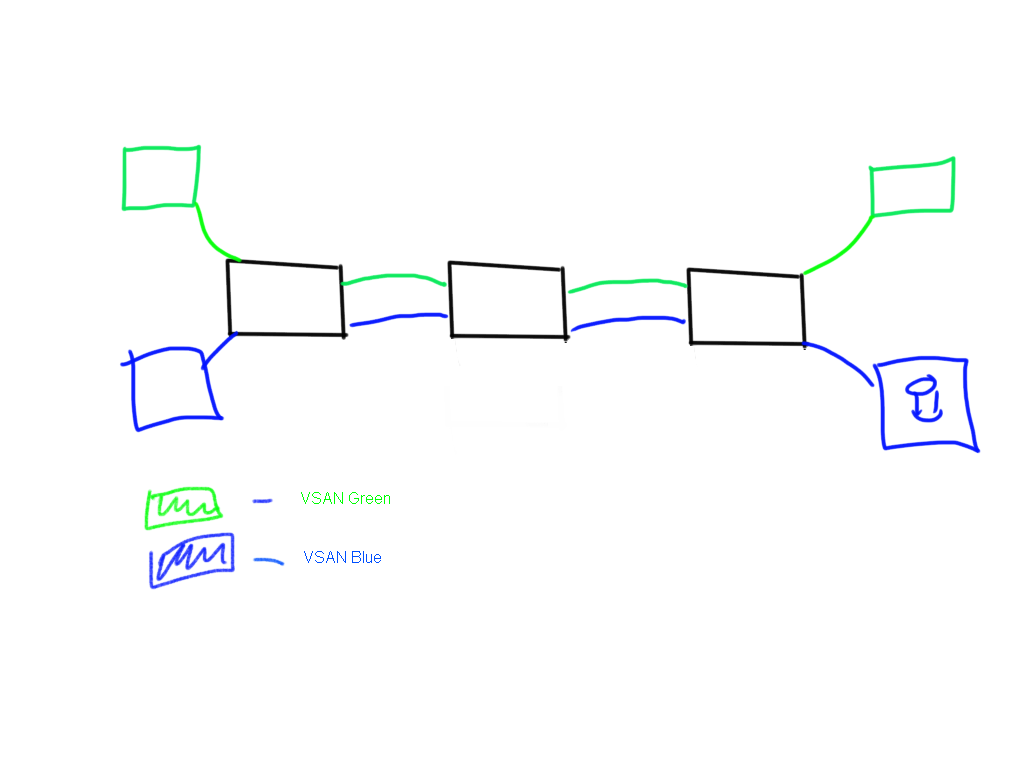

An example:

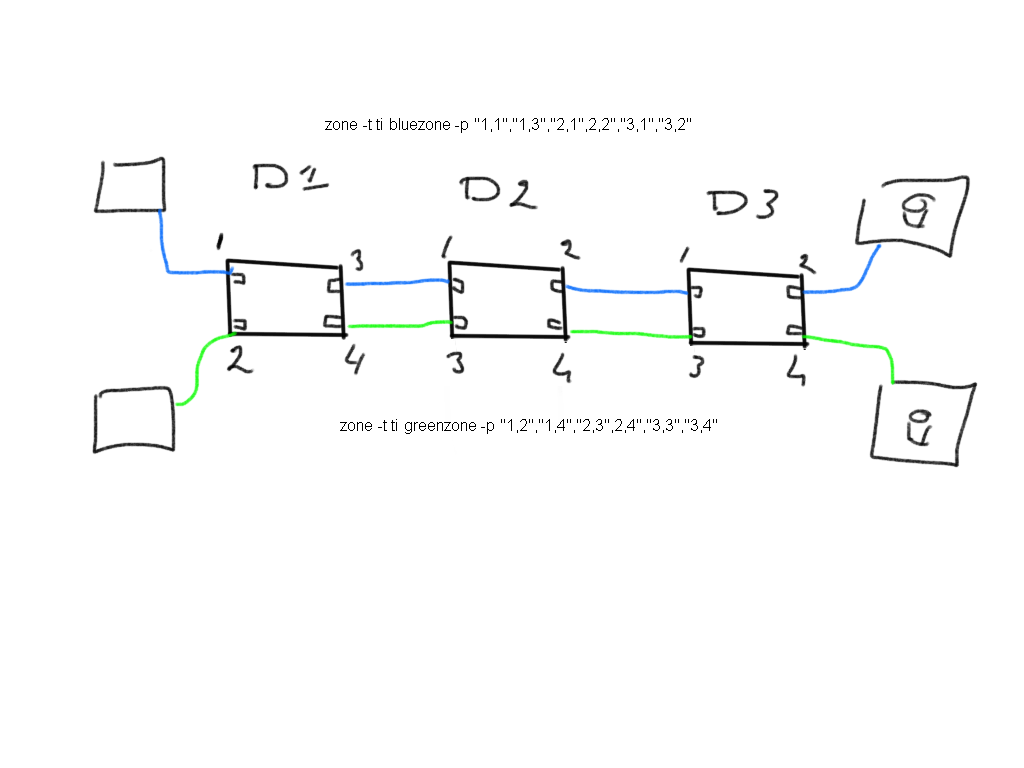

Behold a Cisco configuration:

The ISLs (trunks) between the three switches were configured to carry traffic from each VSAN, blue and green. If you added devices, you simply gave them a VSAN membership and the depicted trunks would carry the traffic specific to that VSAN.
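
To make the contrast concrete, here is a toy Python model of per-trunk VSAN filtering along the lines of the two-trunk setup mentioned earlier. It is a sketch of the idea, not NX-OS behaviour or syntax: the trunk names and VSAN numbers (10 for blue, 20 for green) are hypothetical. The only point is that adding a device touches nothing but its VSAN membership.

```python
# Hypothetical allowed-VSAN lists per trunk (VSAN 10 = blue, 20 = green).
ALLOWED_VSANS: dict[str, set[int]] = {
    "trunk_A": {10},
    "trunk_B": {20},
}


def trunks_for_vsan(vsan: int) -> list[str]:
    """Return the trunks whose allowed list includes this VSAN."""
    return sorted(t for t, vsans in ALLOWED_VSANS.items() if vsan in vsans)


def add_device(memberships: dict[str, int], device: str, vsan: int) -> None:
    """Adding a device only means giving it a VSAN; no trunk is touched."""
    memberships[device] = vsan


if __name__ == "__main__":
    members: dict[str, int] = {}
    add_device(members, "host1_hba0", 10)
    add_device(members, "array_port3", 20)
    for device, vsan in members.items():
        print(device, "->", trunks_for_vsan(vsan))
```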

If you wanted to do a similar thing in a Brocade environment, you had to create so-called Traffic Isolation zones. The name itself is already a misnomer, as in effect it’s not really a zone but more a routing directive.

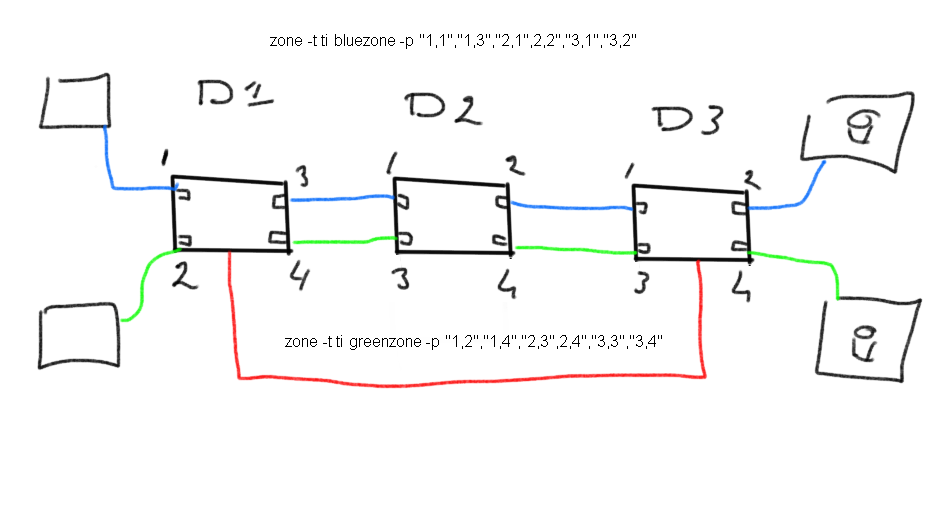

As you can see, you have to specifically add each device port and each individual ISL port to the “zone” in order to direct the ASIC to route frames between specific ports. When adding new devices, you have to create a new “zone” for each of them, with a similar command.
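
As a rough sketch of why this scales badly, the snippet below models a TI zone as nothing more than a set of (domain, port index) members, mirroring the D,I notation Brocade uses for TI zone members. The zone name and port numbers are hypothetical. The helper lists device ports that no TI zone mentions, which, per the text above, is every newly added device until someone writes another zone for it.

```python
from dataclasses import dataclass, field

# A TI zone member is a (domain, port_index) pair, mirroring D,I notation.
Member = tuple[int, int]


@dataclass
class TIZone:
    """Hypothetical model: a TI zone is only a set of device and ISL ports."""
    name: str
    members: set[Member] = field(default_factory=set)
    failover: bool = True


def uncovered(device_ports: list[Member], zones: list[TIZone]) -> list[Member]:
    """Return device ports that no TI zone mentions (not steered by any zone)."""
    covered: set[Member] = set().union(*(z.members for z in zones))
    return [p for p in device_ports if p not in covered]


if __name__ == "__main__":
    # Host on 1,1 to array on 3,2 via ISL ports 1,10 -> 2,4 and 2,5 -> 3,12.
    zone = TIZone("ti_host1_array1",
                  {(1, 1), (1, 10), (2, 4), (2, 5), (3, 12), (3, 2)})
    new_host = (1, 2)  # freshly cabled HBA
    print(uncovered([(1, 1), (3, 2), new_host], [zone]))  # [(1, 2)]
```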

TI-Zone redundancy.

As with many things in networking, there are some holy grails. One of them in storage networking is FSPF (Fabric Shortest Path First), the underlying mechanism that sets up routes in the fabric using the shortest-path algorithm of my fellow Dutchman Edsger Dijkstra. This algorithm is not to be tampered with and takes precedence over TI zoning adjustments. This basically means that if you create a TI zone as in the previous picture and later on decide to add an ISL between Domain 1 and Domain 3, the shortest path between 1 and 3 is direct and no longer via 2.

As a dedicated path is configured between the hosts and arrays to go via domain 2, this now conflicts with FSPF. As FSPF takes precedence over TI zoning, all traffic is now directed over the red ISL.
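
The FSPF side of this can be illustrated with a plain Dijkstra shortest-path computation over domain IDs. This is a simplified sketch, not Brocade's FSPF implementation: it assumes a hypothetical uniform cost of 500 per ISL and ignores equal-cost paths and trunking. With only the 1-2 and 2-3 ISLs, the shortest route from domain 1 to domain 3 matches the TI path via domain 2; once the direct 1-3 ISL is added, it no longer does.

```python
import heapq

Link = tuple[int, int]  # undirected ISL between two domain IDs


def shortest_path(links: dict[Link, int], src: int, dst: int) -> list[int]:
    """Dijkstra over domain IDs; returns [] if dst is unreachable."""
    graph: dict[int, list[tuple[int, int]]] = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))
    dist = {src: 0}
    prev: dict[int, int] = {}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist[node]:
            continue  # stale heap entry
        for nxt, cost in graph.get(node, []):
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if dst not in dist:
        return []
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


if __name__ == "__main__":
    COST = 500  # hypothetical uniform ISL cost
    links = {(1, 2): COST, (2, 3): COST}
    ti_path = [1, 2, 3]  # path pinned by the TI zone
    print(shortest_path(links, 1, 3) == ti_path)  # True
    links[(1, 3)] = COST  # the new, direct "red" ISL
    print(shortest_path(links, 1, 3))  # [1, 3]
```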

****** caveat ******* The above is true if you configured the TI zone with “Failover enabled”. If you hadn’t done that, basically all traffic simply stops and your hosts/applications come to a grinding halt. So even though nothing is physically broken and valid active connections are all available, a fairly simple mistake can lead to nasty consequences.
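
The two outcomes described in the caveat boil down to a small decision, sketched below. It only models the conflict case from this section, treats "the shortest path" as a single path, and illustrates the text above rather than describing FOS internals.

```python
from typing import Optional

Path = list[int]  # sequence of domain IDs


def effective_route(ti_path: Path, fspf_path: Path,
                    failover_enabled: bool) -> Optional[Path]:
    """Route taken per the behaviour described above, or None if traffic stops."""
    if ti_path == fspf_path:
        return ti_path    # the TI path is also the shortest path: honoured
    if failover_enabled:
        return fspf_path  # FSPF wins and traffic leaves the dedicated path
    return None           # failover disabled: traffic stops


if __name__ == "__main__":
    ti = [1, 2, 3]
    print(effective_route(ti, [1, 2, 3], failover_enabled=False))  # [1, 2, 3]
    print(effective_route(ti, [1, 3], failover_enabled=True))      # [1, 3]
    print(effective_route(ti, [1, 3], failover_enabled=False))     # None
```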

TI zone membership

In older FOS codes a port could only be a member of one TI zone. This meant that you had to sacrifice a fair number of ports (including ISLs) to have X paths in your fabric. As of FOS 6.x a port can be a member of multiple TI zones.
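
A quick way to see the cost of the old rule is a membership check like the one below. The zones and ports are hypothetical. Under the one-zone-per-port rule, the ISL ports shared by both zones are flagged, which is exactly where you would have needed a second, dedicated ISL for the second zone.

```python
from collections import Counter

Member = tuple[int, int]  # (domain, port_index)


def conflicting_ports(zones: dict[str, set[Member]],
                      one_zone_per_port: bool) -> set[Member]:
    """Ports that break the membership rule for the chosen FOS behaviour."""
    if not one_zone_per_port:
        return set()  # FOS 6.x and later: a port may sit in several TI zones
    counts = Counter(m for members in zones.values() for m in members)
    return {m for m, n in counts.items() if n > 1}


if __name__ == "__main__":
    zones = {
        "ti_disk": {(1, 1), (1, 10), (2, 4)},
        "ti_tape": {(1, 2), (1, 10), (2, 4)},  # reuses the same ISL
    }
    print(conflicting_ports(zones, one_zone_per_port=True))
    print(conflicting_ports(zones, one_zone_per_port=False))  # set()
```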

The administrator nightmare.

If you think the above is complex, you should try to apply this logic to fabrics where logical switches, XISLs, local ISLs and FCR fabrics are used. Given that Brocade has dedicated an entire (rather large) chapter of the FOS admin guide to it, you can imagine there are a lot of things to look at before making TI zones a part of your infrastructure. Not only will you embark on a journey of complexity, but you will put yourself in a straitjacket which will make changes in your fabric very time-consuming.

The result of the complex nature of TI zoning and its extensive ruleset is that you can compare the implementation to booby-trapping a maze. You had better make sure you have extensively designed and documented the configuration; otherwise one mistake can have a severe impact on your entire environment.

My advice

- Stay away from it and explore other options first.

Remember that by creating TI zones you are directly interfering with routing/switching decisions. This also changes the dynamics of traffic flow: the existing routing algorithms that optimize and balance traffic over ISLs no longer work fully, to the extent of the restrictions the TI zone configuration imposes.

From an administrative standpoint creating virtual fabrics, logical switches, FCR routed topologies and basic/advanced zoning can cover the majority of needs when it comes to capacity, performance, device and traffic isolation as well as security.

Is it all that bad?

As I said in the introduction, I think TI zoning, seen from an engineering perspective, has been, and still is, a blunder of the highest order and a very quick and extremely dirty method of trying to implement a customer demand.

Starting from a fairly simple concept, redirecting traffic from A to B over a certain path outside of the existing routing algorithms but still within the golden FSPF rules, the design produced a feature that is complex to implement, difficult to maintain and extremely prone to human error and software bugs.

I think the main reason Brocade uses this method is that the core of the switching code is based on the first transmission word (word 0) of each FC frame, where the R_CTL and Destination ID (D_ID) are used by the ASIC to determine the route for that particular frame. Brocade has never used a store-and-forward methodology and has always used cut-through routing, so immediately after the D_ID is known the ASIC is already forwarding the frame. Although extremely fast, this ultimately rules out any “on-the-spot” switching decisions on a frame-by-frame basis.
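
To illustrate how little information that is, here is a Python sketch that splits header word 0 into R_CTL and the 24-bit D_ID, then picks an egress port from the D_ID's domain byte alone. The route table and port numbers are hypothetical; real ASICs do this in hardware with far richer tables. The domain/area/port split assumes the usual 8/8/8 layout of a 24-bit Fibre Channel address.

```python
import struct
from typing import Optional

# Hypothetical route table on domain 1: destination domain -> egress port.
ROUTE_TABLE = {2: 10, 3: 10}


def parse_word0(header: bytes) -> dict[str, int]:
    """Split FC header word 0 (big-endian) into R_CTL and D_ID fields."""
    (word0,) = struct.unpack(">I", header[:4])
    d_id = word0 & 0xFFFFFF
    return {
        "r_ctl": word0 >> 24,
        "d_id": d_id,
        "domain": d_id >> 16,
        "area": (d_id >> 8) & 0xFF,
        "port": d_id & 0xFF,
    }


def egress_port(header: bytes) -> Optional[int]:
    """Route on the destination domain only; nothing past word 0 is read."""
    return ROUTE_TABLE.get(parse_word0(header)["domain"])


if __name__ == "__main__":
    frame = bytes.fromhex("06030200") + bytes(20)  # made-up frame, D_ID 03.02.00
    print(parse_word0(frame))
    print(egress_port(frame))  # 10
```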

Why it should change

In order to increase the flexibility of directing traffic flows, I think the switching decision should be deferred until after the 3rd or 4th transmission word of the FC frame. The ASIC would then have a huge range of additional information at its disposal to make more intelligent switching decisions. Besides the Source ID (S_ID), transmission words 1, 2 and 3 contain CS_CTL (which is already hijacked for QoS purposes), the frame TYPE, F_CTL (frame control) and DF_CTL. Utilizing these fields provides a much simpler approach (from an administrative and operational perspective) to configuring traffic flows.
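
For reference, the sketch below decodes header words 1 to 3 following the standard FC frame header layout: CS_CTL (or Priority) and S_ID in word 1, TYPE and F_CTL in word 2, SEQ_ID, DF_CTL and SEQ_CNT in word 3. It is only a field decoder showing what would be available if the decision were deferred; it makes no claim about how a switch ASIC would use these fields.

```python
import struct


def parse_words_1_to_3(header: bytes) -> dict[str, int]:
    """Decode FC header words 1-3 (bytes 4..15, big-endian)."""
    w1, w2, w3 = struct.unpack(">III", header[4:16])
    return {
        "cs_ctl": w1 >> 24,           # CS_CTL or Priority byte
        "s_id": w1 & 0xFFFFFF,        # source N_Port ID
        "type": w2 >> 24,             # FC-4 type, e.g. 0x08 for SCSI
        "f_ctl": w2 & 0xFFFFFF,       # frame control
        "seq_id": w3 >> 24,
        "df_ctl": (w3 >> 16) & 0xFF,  # optional header indicators
        "seq_cnt": w3 & 0xFFFF,
    }


if __name__ == "__main__":
    hdr = bytes.fromhex(
        "06030200"   # word 0: R_CTL, D_ID
        "00011000"   # word 1: CS_CTL, S_ID 01.10.00
        "08290000"   # word 2: TYPE 0x08, F_CTL
        "01000000"   # word 3: SEQ_ID, DF_CTL, SEQ_CNT
        "12340000"   # word 4: OX_ID, RX_ID
        "00000000")  # word 5: parameter
    print(parse_words_1_to_3(hdr))
```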

As an example, I’ll take a configuration which is fairly common in somewhat larger customer environments where open systems and mainframe share the same storage network. These environments often utilize virtual fabrics where base switches and XISLs are used to connect the two physical chassis. Unless you create very complicated TI zones which span multiple domains and fabrics, there is no way to direct FICON traffic over one XISL and SCSI traffic over the other. If, however, the switching logic were able to check the TYPE field, it would be a walk in the park to select traffic type 08 (SCSI) and move it to XISL 1, and to select traffic types 19 and 1A (SBCCS Channel and Control Unit) and move those to XISL 2.
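
A hypothetical version of that policy could look like the sketch below. It assumes the switch can hold its decision until header word 2 has arrived, and it uses the TYPE values quoted above (08 for SCSI, 19 and 1A for SBCCS). The XISL names and the fallback for any other TYPE are assumptions for illustration, not an existing FOS feature.

```python
import struct

# Hypothetical policy: FC-4 TYPE value -> XISL name.
TYPE_TO_XISL = {
    0x08: "XISL_1",  # SCSI
    0x19: "XISL_2",  # SBCCS channel
    0x1A: "XISL_2",  # SBCCS control unit
}
DEFAULT_XISL = "XISL_1"  # assumption: any other TYPE stays on XISL 1


def fc4_type(header: bytes) -> int:
    """TYPE is the most significant byte of header word 2 (byte offset 8)."""
    (word2,) = struct.unpack(">I", header[8:12])
    return word2 >> 24


def select_xisl(header: bytes) -> str:
    """Choose an XISL from the TYPE field instead of from the D_ID alone."""
    return TYPE_TO_XISL.get(fc4_type(header), DEFAULT_XISL)


def make_header(type_code: int) -> bytes:
    """Build a 24-byte dummy header with only the TYPE byte set."""
    return bytes(8) + bytes([type_code, 0, 0, 0]) + bytes(12)


if __name__ == "__main__":
    for t in (0x08, 0x19, 0x1A):
        print(hex(t), "->", select_xisl(make_header(t)))
```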

Another example is where a SAN is managed by a service provider. Currently it is extremely hard to direct traffic to a specific destination while differentiating between traffic generated by different “tenants”. If sources and/or destinations could be flagged/marked/tagged for each specific tenant, it would be far easier to route this traffic over specific paths.
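
The tenant case could be sketched the same way, assuming tenants are identified by a hypothetical table of source address prefixes (the domain and area bytes of the S_ID). Everything named here (tenants, prefixes and path names) is illustrative only; untagged sources simply fall back to normal FSPF routing.

```python
from typing import Optional

# Hypothetical tenant tags keyed by (domain, area) of the source N_Port ID.
TENANT_BY_PREFIX: dict[tuple[int, int], str] = {
    (1, 0x10): "tenant_a",
    (1, 0x20): "tenant_b",
}
PATH_BY_TENANT = {"tenant_a": "isl_group_1", "tenant_b": "isl_group_2"}


def tenant_of(s_id: int) -> Optional[str]:
    """Look up the tenant from the S_ID's domain and area bytes."""
    return TENANT_BY_PREFIX.get((s_id >> 16, (s_id >> 8) & 0xFF))


def path_for(s_id: int, default: str = "fspf_default") -> str:
    """Tenant-specific path if the source is tagged, else plain FSPF."""
    tenant = tenant_of(s_id)
    return PATH_BY_TENANT[tenant] if tenant is not None else default


if __name__ == "__main__":
    for s_id in (0x011000, 0x012005, 0x020100):
        print(f"{s_id:06x}", "->", path_for(s_id))
```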

I’ve had discussions with customers, service personnel and field engineers and the majority had issues with TI zoning when they needed it.

If I were chief of FOS engineering, I would have a serious discussion with my developers and the representatives in the T11 committee to somehow create these options.

Cheers

Erwin

I do agree with Erwin on that. Better to focus on a correct routing configuration than to think that Traffic Isolation will really protect our bandwidth better.

We have been using SAN routing since 2008 to interconnect our data centers through a MAN link, but we wanted to segregate tape and disk traffic onto different paths.

We started using Zone Traffic Isolation for EVA replication, and we pushed Brocade to accept Fabric Level Traffic Isolation for tape traffic, as we had too many sources and targets (LAN-free agents to tape devices) to do it zone by zone (we even got them to write it down in the admin guide).

Now that we are moving to the 7840 with a mix of FCIP and IP Extension, we will drop this very complex feature, which was more for the sake of management than for real protection of our production environment.