I was recently involved in a discussion around QD (queue depth) settings and vendor comparisons. It almost got to the point where QD capabilities were treated as inherently linked to the quality and capabilities of the arrays. Total and absolute nonsense, of course. Let me make one thing clear: “QUEUING IS BAD”, in the general sense that is. Nobody wants to wait in line, and neither does an application.

Whenever an application requests data or writes the results of a process, the request goes down the I/O stack. In the storage world there are multiple locations where such a data portion can get queued. Looking at it from the host level, the queue depth is set at two levels.

(P.S. I use the terms device, port and array interchangeably; they all refer to the device receiving commands from hosts.)

1. The port driver. In Windows this is the storport.sys driver.

2. The adapter.

The IO stack

A basic overview of the Windows I/O stack looks a bit like this.

There are more bells and whistles involved, such as I/O schedulers, virtual interface drivers and PnP drivers, but those are beyond the scope of this post. (For reference, you may want to check out Werner Fischer's Linux I/O stack diagram to see what I mean.)

Why QD?

The QD setting on the port driver determines the number of outstanding active SCSI commands it will keep track of per LUN, or per virtual LUN in the case of an MPIO device. The adapter has a parameter that controls the maximum number of open exchanges (in the open-systems world, SCSI requests). If other technologies such as MPIO are being used, you might even see additional queues. The QD setting is a host-based parameter that controls the total number of active requests (SCSI commands) to a device. The size of the queue is determined by the capabilities of that device. If a vendor mentions a QD value of 500, it means that the device is able to sustain 500 concurrent active SCSI commands at any given point in time. As long as the cumulative number of commands coming from all hosts does not exceed 500 at any single moment, these requests will be handled normally. If request 501 comes in, it will either be discarded or answered with a “queue-full” status returned to the originator of the request.
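A minimal Python sketch of the 500-command example, assuming a single shared queue on the device that all hosts draw from. The `Target` class and its methods are hypothetical; the sketch only models the queue-full branch (status 0x28, Task Set Full), not the option of silently discarding the command.

```python
from dataclasses import dataclass

# SCSI status byte values (T10 SAM)
GOOD = 0x00
TASK_SET_FULL = 0x28


@dataclass
class Target:
    """Toy device that can track a fixed number of active SCSI commands.

    `max_active` stands in for the vendor's published queue depth.
    Hypothetical model, not any vendor's API.
    """
    max_active: int
    active: int = 0

    def submit(self) -> int:
        """Accept a command if a slot is free, otherwise report queue full."""
        if self.active >= self.max_active:
            return TASK_SET_FULL
        self.active += 1
        return GOOD

    def complete(self) -> None:
        """A command finished and releases its slot."""
        if self.active > 0:
            self.active -= 1


array = Target(max_active=500)
statuses = [array.submit() for _ in range(501)]  # commands from all hosts combined
print(statuses.count(GOOD), hex(statuses[-1]))   # 500 0x28
```

Because every host's commands land in the same `Target`, the limit applies to the cumulative total, not to each host separately.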

The queue location

As I’ve shown in the I/O stack above, where you set the QD values determines where the queue ends up. If the QD value at the port-driver level is set higher than the HBA value, the storport driver will need to allocate memory out of the non-paged pool. This used to be a massive issue in the NT and Windows 2000 era, since this pool was fairly limited. Excessive garbage collection, huge CPU performance issues and high interrupt counts could be observed when this happened. When the values are reversed, i.e. the HBA value is higher than the port-driver value, the queue is located and managed by the HBA itself, which is always preferred. The hardware capabilities of the HBA determine the size of the queue, based upon the number of targets and LUNs you have mapped on that HBA. Enterprise HBAs like the Emulex LPe16000 or QLogic 2x00 series list specifications such as “Open Exchanges” or “Concurrent Tasks”, with numbers between 2048 and 8192. Lower-priced HBAs have much less memory, hence less space to store data, and therefore a lower specification.
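The placement rule and the HBA exchange budget can be sketched in a few lines. This assumes, for simplicity, that the HBA spreads its open exchanges evenly over every mapped LUN, which real drivers need not do; the function names and the 2048-exchange figure are illustrative only.

```python
def queue_location(port_driver_qd: int, hba_qd: int) -> str:
    """Where excess commands wait, following the rule described above."""
    if port_driver_qd > hba_qd:
        # storport has to hold the overflow in non-paged pool memory
        return "port driver (host memory)"
    # equal or lower port-driver value: nothing exceeds the HBA's limit
    return "HBA"


def per_lun_share(open_exchanges: int, targets: int, luns_per_target: int) -> int:
    """Even split of an HBA's exchange budget across all mapped LUNs.

    Simplifying assumption: real HBAs/drivers may not split evenly.
    """
    total_luns = targets * luns_per_target
    return open_exchanges // total_luns


print(queue_location(port_driver_qd=64, hba_qd=32))        # port driver (host memory)
print(queue_location(port_driver_qd=32, hba_qd=64))        # HBA
print(per_lun_share(2048, targets=4, luns_per_target=16))  # 32
```

The second function shows why mapping many targets and LUNs through one adapter quickly eats into even a generous exchange count.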

It is not necessarily bad for the queue to sit on the host. When you have 20 hosts connected to a midrange array with only 4GB of cache to store incoming commands, the hosts are often better equipped, with a lot more memory. Depending on the OS and version, you may need to check the non-paged pool size; in general, however, this should not lead to problems.

Resource allocation

Vendors do not stick a wet finger in the air and pick a nice number that might look impressive. You obviously expect a device to handle I/Os, and each I/O request initiates certain actions on the array. Processor time needs to be reserved, threads are assigned, cache slots need to be allocated, the parity engine needs to be notified and back-end offload processes need to be activated, amongst many other things that accompany such a request. You can imagine the additional processes involved when replication and/or snapshots come into play, including long-distance replication, journaling in case of reduced bandwidth, and so on.

The value vendors publish needs to take all of this into account. If the QD settings on the hosts are high enough to fill up the array controller, all these resources will be in use, and in the end they may negatively impact each other from a performance perspective. The problem gets worse when one or more things go wrong and frames go missing between the initiator and target. The array then needs to retain the reservations much longer, since it relies on timeout values to initiate recovery actions. This may lead to up to 60 seconds of “IDLE” time (a generic default SCSI timeout value) before the array is able to release all these resources and re-assign them to other I/O requests.
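To see why lost frames hurt so much, Little's law (average outstanding = arrival rate × time held) can be applied to the array's command slots. This is a back-of-the-envelope sketch, not from the original text: the 10,000 IOPS, 1 ms service time and 0.1% loss rate are hypothetical, and every lost command is assumed to hold its resources for the full 60-second timeout.

```python
def slots_in_use(iops: float, service_time_s: float,
                 lost_fraction: float = 0.0, timeout_s: float = 60.0) -> float:
    """Average command slots occupied, via Little's law (L = lambda * W).

    Healthy commands hold a slot for `service_time_s`; commands whose frames
    go missing are assumed to hold it for the full `timeout_s`.
    """
    healthy = iops * (1 - lost_fraction) * service_time_s
    stuck = iops * lost_fraction * timeout_s
    return healthy + stuck


# Hypothetical workload: 10,000 IOPS at 1 ms service time
print(slots_in_use(10_000, 0.001))                       # about 10
print(slots_in_use(10_000, 0.001, lost_fraction=0.001))  # about 610
```

In this made-up case, one command in a thousand going astray multiplies the slots in use roughly sixtyfold, which is the resource pressure described above.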

Multi-sequence task set

A write I/O may be broken up into multiple requests if the array's resources are not sufficient to complete the entire I/O in one go. This is at the discretion of the array, since it knows what it can handle at that given point in time. In technical terms, such a multi-request I/O is called a task set. For a very large I/O request, the write() command specifies the LUN and LBA it wants to write to, together with the size of the request. The array then sends an XFER_RDY back, indicating acceptance of the command and the so-called “Burst Length” (BL), which is the amount of data the HBA is allowed to send. If the BL equals the size in the write() command, all data will be sent from the initiator to the array in one go. If the array does not have enough resources at that time, it will reduce the BL in the XFER_RDY to whatever it is able to receive, and the I/O is thus split into multiple chunks/sequences.
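The splitting behaviour can be modelled as a loop that keeps granting bursts until the whole write is covered. In FCP the XFER_RDY carries a relative offset and a burst length; here `available` is a hypothetical list of how many bytes the array can accept each time it is ready, and the function name is made up for illustration.

```python
from typing import List, Tuple


def xfer_rdy_bursts(write_len: int, available: List[int]) -> List[Tuple[int, int]]:
    """Return (relative_offset, burst_length) pairs for one write command.

    Each entry in `available` is the room (in bytes) the array has when it
    is ready to issue the next XFER_RDY.
    """
    bursts: List[Tuple[int, int]] = []
    offset = 0
    for room in available:
        if offset >= write_len:
            break
        burst = min(room, write_len - offset)
        if burst <= 0:
            continue  # no room yet: the array would simply delay its XFER_RDY
        bursts.append((offset, burst))
        offset += burst
    if offset < write_len:
        raise RuntimeError("array never granted room for the remaining data")
    return bursts


print(xfer_rdy_bursts(262144, [262144]))  # one sequence covers the whole write
```

A 256 KiB write against an array with room for only 64 KiB at first ends up as three sequences: `xfer_rdy_bursts(262144, [65536, 131072, 131072])` returns `[(0, 65536), (65536, 131072), (196608, 65536)]`.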

Performance

As shown above, the maximum number of concurrent I/O requests is proportional to the resource capabilities of the array. Depending on the design of the array, these values are limited per target port, port block, controller or the entire array, so it’s difficult to give a generic example. You really need to know the architecture of the array and design your LUN mappings and QD values from there. Taking the asynchronous SCSI behaviour into account, you can see that large write I/Os may be delayed at multiple layers of the stack.
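One way to work backwards from the array's limits to a host QD value is to divide the limit of the bottleneck component by the number of LUN paths that share it, assuming the worst case where every initiator fills every LUN queue at once. The function and the numbers are hypothetical; which component is the bottleneck depends on the array architecture, as noted above.

```python
def max_lun_qd(limit: int, initiators: int, luns_per_initiator: int) -> int:
    """Largest per-LUN QD that keeps worst-case demand within `limit`.

    `limit` is the concurrent-command ceiling of whichever component is the
    bottleneck in this array's design (target port, port block, controller
    or whole array). Worst case: every initiator fills every LUN queue.
    """
    paths = initiators * luns_per_initiator
    qd = limit // paths
    if qd < 1:
        raise ValueError("more LUN paths than command slots; rethink the mapping")
    return qd


# Hypothetical: a 2048-command target port shared by 8 initiators, 10 LUNs each
print(max_lun_qd(2048, initiators=8, luns_per_initiator=10))  # 25
```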

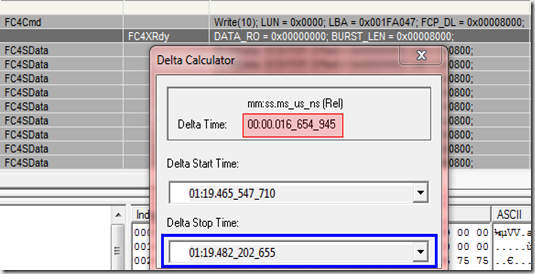

If an array is busy, you may see a delayed response on the XFER_RDY. In the Fibre Channel trace snippet below, the device sent an XFER_RDY back 16 ms after receiving the write(10) command. Not a single chunk of data had been sent at that point.

A busy device does not indicate a queue-depth issue per se, but it may be that too many resources have already been allocated for this request to complete, and an incorrect QD setting may be the reason. Depending on the returned status, you might be able to determine whether timeouts are related to QD or just to busy arrays. If the array is unable to accept a new command due to a queuing condition, it will send back a SCSI status of 0x28 (Task Set Full), and the host should retry the command at a later time. If the array (or port) does not have any open SCSI commands active but is still unable to process the command, it will (or shall) return a 0x08 (Busy) status.
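A small triage helper makes the distinction between the two statuses explicit. The status byte values come from the T10 SAM standard; the `diagnose` function and its advice strings are only an illustration of the reasoning above.

```python
# SCSI status byte values from the T10 SAM standard (subset)
SCSI_STATUS = {
    0x00: "GOOD",
    0x02: "CHECK CONDITION",
    0x08: "BUSY",
    0x28: "TASK SET FULL",
    0x30: "ACA ACTIVE",
}


def diagnose(status: int) -> str:
    """Rough triage of a returned status byte (illustrative only)."""
    name = SCSI_STATUS.get(status, f"UNKNOWN (0x{status:02x})")
    if status == 0x28:
        return f"{name}: queuing condition, check QD settings; retry later"
    if status == 0x08:
        return f"{name}: array busy, no full task set; retry later"
    if status == 0x02:
        return f"{name}: error reported, host must inquire about the status"
    return name


for s in (0x28, 0x08, 0x00):
    print(diagnose(s))
```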

The challenge is to find the “sweet spot” between the capabilities of the array and the QD values you can use. A value that is too low may leave available resources unused, whereas one that is too high may result in unwanted queue-full conditions.
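Not from the original post: one generic way to search for the sweet spot at run time is an additive-increase/multiplicative-decrease (AIMD) throttle that halves the QD on a queue-full condition and grows it slowly after a run of clean completions. The class and parameter names below are hypothetical, and the halving factor and step size are arbitrary choices.

```python
class QueueDepthThrottle:
    """Illustrative AIMD controller for a host-side queue depth."""

    def __init__(self, max_qd: int, min_qd: int = 1, step_after: int = 100):
        self.max_qd = max_qd
        self.min_qd = min_qd
        self.qd = max_qd
        self.step_after = step_after  # clean completions needed per +1 step
        self._successes = 0

    def on_task_set_full(self) -> None:
        # Back off hard: halve the window, but never below the minimum.
        self.qd = max(self.min_qd, self.qd // 2)
        self._successes = 0

    def on_success(self) -> None:
        # Creep back up by one after every `step_after` clean completions.
        self._successes += 1
        if self._successes >= self.step_after:
            self._successes = 0
            if self.qd < self.max_qd:
                self.qd += 1


throttle = QueueDepthThrottle(max_qd=64)
throttle.on_task_set_full()
print(throttle.qd)  # 32
```

With `max_qd=64`, a single Task Set Full drops the window to 32, and it takes 100 successful completions to climb back to 33. The asymmetry is deliberate: backing off quickly relieves the array, while growing slowly avoids oscillating straight back into a queue-full condition.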

Error conditions

You can imagine that when a host has set a QD value of 128 and is fully utilising it, the impact of one or more errors grows with the amount of work the array has to do to handle those error conditions. The array will need to notify the host with a 0x02 (Check Condition) status, and the host will need to inquire about the status. Meanwhile, the array has to retain the status of that I/O request (or task) until the HBA comes back with a response. During that time it sits in a so-called ACA (Auto Contingent Allegiance) condition, in which it might not be able to serve new incoming requests (that depends on some other factors). The response depends on the implementation of the OS and its capabilities. On disk devices you will most likely see an abort or reset. An array will then need to undo all the resource allocations and other housekeeping actions that were set up during the command phase, which takes as many resources as the initial request, if not more.
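The scale of the cleanup depends on which recovery action the host chooses. Per SAM, an ABORT TASK targets one command, while a LOGICAL UNIT RESET aborts every task on that LUN. The helper below is a hypothetical illustration, assuming the host is the only initiator with commands in flight on the LUN.

```python
def tasks_to_unwind(outstanding: int, recovery: str) -> int:
    """How many tasks the array must tear down for a host recovery action.

    `outstanding` counts every command in flight on the LUN, so on a shared
    LUN it should include other initiators' commands too.
    """
    if recovery == "abort_task":
        return min(1, outstanding)   # only the failed command
    if recovery == "lun_reset":
        return outstanding           # everything queued on that LUN
    raise ValueError(f"unsupported recovery action: {recovery}")


# A single host running at its full QD of 128 on one LUN
print(tasks_to_unwind(128, "abort_task"))  # 1
print(tasks_to_unwind(128, "lun_reset"))   # 128
```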

Shared resources

Depending on the fan-in/fan-out ratio and the number of LUNs presented to the respective initiators, the risk of oversubscribing the task queue can be high, especially when no planning is done upfront.
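A quick audit of worst-case demand per target port can flag oversubscription before it happens. The mapping table, host and port names, and the 1024-command port limit are all hypothetical; plug in the figures from your own array documentation.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical mapping rows: (host, target_port, lun_count, per_lun_qd)
Mapping = Tuple[str, str, int, int]


def port_demand(mappings: List[Mapping]) -> Dict[str, int]:
    """Worst-case concurrent commands each target port could receive."""
    demand: Dict[str, int] = defaultdict(int)
    for _host, port, luns, qd in mappings:
        demand[port] += luns * qd
    return dict(demand)


def oversubscribed(mappings: List[Mapping], port_limit: int) -> Dict[str, float]:
    """Ports whose worst-case demand exceeds `port_limit`, with the ratio."""
    return {port: d / port_limit
            for port, d in port_demand(mappings).items() if d > port_limit}


fabric = [
    ("host-a", "port-1", 20, 32),
    ("host-b", "port-1", 20, 32),
    ("host-c", "port-1", 8, 64),
    ("host-c", "port-2", 8, 64),
]
print(port_demand(fabric))           # {'port-1': 1792, 'port-2': 512}
print(oversubscribed(fabric, 1024))  # {'port-1': 1.75}
```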

I hope this explains a bit why managing QD on a host is so important. You are working with shared resources which, if configured incorrectly, can have a severe negative impact on overall performance and availability.

One last remark

Only in extremely rare corner cases (ones with a very poor host configuration from a resource perspective) has a low queue-depth value ever led to performance problems. In almost all scenarios, lowering the QD value IMPROVES the overall performance of the entire I/O stack.
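The reasoning behind this remark can be illustrated with Little's law: when an array is already delivering its maximum throughput, more outstanding commands cannot raise IOPS, so the extra depth only shows up as response time. The 20,000 IOPS ceiling is a hypothetical figure, and the sketch assumes the array stays saturated at every depth shown.

```python
def response_time_ms(outstanding: int, saturated_iops: float) -> float:
    """Little's law rearranged (W = L / lambda) for a throughput-capped array."""
    return outstanding / saturated_iops * 1000


# Hypothetical array that tops out at 20,000 IOPS for this workload
for qd in (32, 64, 128):
    print(qd, round(response_time_ms(qd, 20_000), 2))
```

Doubling the outstanding count from 32 to 64 doubles the response time from 1.6 ms to 3.2 ms without adding a single IOPS.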

Regards,

Erwin van Londen