Historically, the need to segregate Fibre Channel traffic and to prioritize frames and flows has not been high on the design agenda of most companies I have dealt with. When there is a need to differentiate between business applications of different importance, you’ll very often see that additional equipment is purchased and the topologies are adjusted as needed. This obviously works well. However, when the ratio of capex vs opex is out of balance but the business still needs its applications separated in order of criticality, you need to consider other options. As in the IP networking world, Fibre Channel has similar functionality, which has been in the FC standards for a long time but has only recently been introduced by some vendors.

Brocade has had QoS functionality in their FOS code for a long time. It was, however, limited to certain flows between an HBA and an array port. The functionality was achieved by using so-called QoS zones: based on a specific zone name, the traffic flow can be directed onto certain virtual channels on the ISLs.

Virtual Channels

Ever since Fibre Channel Class 3 became the dominant service class for SCSI (FCP) and the other classes were, more or less, abolished, numerous features and functions that were developed for those other classes have been happily hijacked by vendors to provide functionality that did not originally exist for class 3. Virtual Channels is one of them, originally a class 4 feature.

Virtual Channels have been used by Brocade for a long time. They carve up ISLs into multiple virtual tracks, which makes it possible to map certain frames onto these virtual channels in order to circumvent (in a limited fashion) head-of-line blocking and also to provide a “QoS” service based on bandwidth, if configured as such. In Brocade’s implementation each ISL is configured with at least 8 virtual channels by default, unless you manually configure an ISL to use just a single channel via the “portcfgislmode” command.

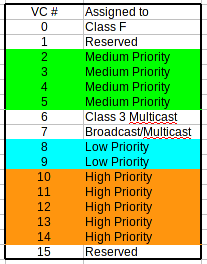

These channels are:

0. Fabric services – These always have the highest priority and will pre-empt data-frames.

1. Reserved

2-5. Data

6. Multicast

7. Broadcast

I won’t go into VCs 1, 6 and 7 here.

When the QoS feature is enabled on an ISL it provides additional virtual channels 8 to 14, whereby 8 and 9 are flagged for low-priority traffic and 10 to 14 for high-priority traffic.

The end picture will then look something like this:

The mapping of these virtual channels is based upon the zone name prefix. There are three predefined values: “QOSH_”, “QOSM_” and “QOSL_”. So if an initiator and target are configured in a zone called “QOSM_prodserver1”, their traffic will traverse any of VCs 2 to 5. (The actual choice of VC is based upon the FCID of the destination address.) If, however, they are configured in a zone named “QOSH_prodserver1”, they will use one of VCs 10 to 14. (The mapping onto the LOW and HIGH VCs is not based upon the FCID of the destination address but is calculated by a Brocade proprietary algorithm which I am not entitled to disclose.)

If you want to use QoS and manually pin a certain HBA/target pair onto a specific virtual channel in that group, you can append the VC number to the QoS prefix, such as “QOSH2_prodserver1”. This causes ALL frames between that particular source and destination to use VC 11 in the QOSH group. The same is true for QOSL_. You cannot map traffic to a specific VC in the QOSM group.

If you do NOT specifically configure a QoS zone, and a zone name is thus not prefixed with QOSH_, QOSM_ or QOSL_, the traffic will automatically use a VC of the QOSM group (2-5).
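The prefix rules above can be sketched in a few lines of Python. This is only an illustration of the naming convention, not Brocade code: the function name `classify_zone` and the regular expression are made up for this example, and the assumption that the appended number is a 1-based position within the group is inferred from the single “QOSH2_” example (VC 11). For zones without a pinned VC the function only returns the candidate VCs, since the actual selection is done by the switch.

```python
import re

# VC ranges per QoS group, as described in the text.
VC_GROUPS = {
    "H": list(range(10, 15)),  # high priority: VC 10-14
    "M": list(range(2, 6)),    # medium priority: VC 2-5
    "L": list(range(8, 10)),   # low priority: VC 8-9
}

# Hypothetical pattern: "QOS", the level, an optional VC position, "_".
_QOS_PREFIX = re.compile(r"^QOS([HML])(\d+)?_")


def classify_zone(zone_name):
    """Return (level, candidate_vcs) for a zone name."""
    match = _QOS_PREFIX.match(zone_name)
    if match is None:
        # No QoS prefix: traffic falls into the medium group.
        return "M", VC_GROUPS["M"]
    level, position = match.group(1), match.group(2)
    vcs = VC_GROUPS[level]
    if position is None:
        return level, vcs
    if level == "M":
        raise ValueError("a specific VC cannot be selected in the QOSM group")
    # Assumption: the number is a 1-based position within the group.
    index = int(position)
    if not 1 <= index <= len(vcs):
        raise ValueError(f"VC position {index} is out of range for QOS{level}")
    return level, [vcs[index - 1]]


print(classify_zone("QOSH2_prodserver1"))
print(classify_zone("prodserver1"))
```

The second call shows the fallback: a zone without any QoS prefix ends up in the medium group.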

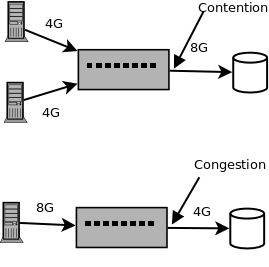

There is a misconception that QoS is always in effect; that is not the case. The QoS algorithm only kicks into action when there is contention on that particular ISL. Contention can be caused either by congestion (i.e. more traffic is directed over that ISL than the bandwidth provides) or by multiple frames arriving at the switch at the same time which are all destined to traverse that ISL.

Schematically you would represent the difference as follows:

Obviously, if you have multiple ingress flows causing congestion, this will also result in contention at some stage, at which point QoS kicks in.

Based on the QoS zone mapping, and if contention occurs, the priorities of the frames being sent over the ISL are set to a 60%/30%/10% ratio. This means that if frames arrive at the switch at the same time and are mapped into three different QoS zones, they will be sent in such a way that the QOSH zone gets 6 frames over the ISL, then the QOSM zone gets 3 frames and the QOSL zone gets 1 frame, after which QOSH gets another 6 frames, and so on. Now, you can see somewhat of a flaw in the algorithm here: the QoS mechanism does not take the frame size into account. It could well be that frames from a QOSL zone have a full 2K payload while frames from a QOSH zone are very small, let’s say 128 bytes. This is the reason why even on a fully utilized ISL the performance figures may only show 70% to 80% usage. This has raised questions in some cases I handled.

Even though this functionality is fully arranged by the fabric, and is therefore easy to set up and maintain, there is a big hurdle here: you cannot differentiate between certain parts of the traffic within an initiator-to-target pair.
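To make the frame-count versus byte-count effect concrete, here is a minimal simulation sketch of a 6/3/1 frame scheduler. It is not the ASIC algorithm, just a plain weighted round-robin under the assumption that all three queues are permanently backlogged. The frame sizes for the high and low classes are the ones from the example above; the 1024-byte size for the medium class, the queue depths and the name `schedule` are hypothetical.

```python
from collections import deque

# Frames sent per scheduling round for each QoS level (60/30/10).
WEIGHTS = {"H": 6, "M": 3, "L": 1}


def schedule(queues, rounds):
    """Drain per-level queues of frame sizes (bytes) in 6/3/1 order.

    Returns two dicts keyed by level: frames sent and bytes sent.
    """
    frames = {level: 0 for level in WEIGHTS}
    octets = {level: 0 for level in WEIGHTS}
    for _round in range(rounds):
        for level, quota in WEIGHTS.items():
            queue = queues[level]
            for _slot in range(quota):
                if not queue:
                    break  # nothing waiting at this level
                octets[level] += queue.popleft()
                frames[level] += 1
    return frames, octets


queues = {
    "H": deque([128] * 600),   # many small high-priority frames
    "M": deque([1024] * 300),  # hypothetical medium frame size
    "L": deque([2048] * 100),  # full-size low-priority frames
}
frames, octets = schedule(queues, rounds=100)
total = sum(octets.values())
shares = {level: round(100 * octets[level] / total, 1) for level in octets}
print("frames sent:", frames)
print("byte share (%):", shares)
```

With these inputs the high-priority class gets 60% of the frames but only about 13% of the bytes on the link, which is exactly the kind of mismatch between the ratio and the observed throughput figures that tends to raise questions.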

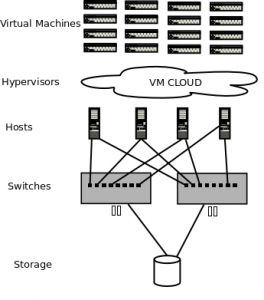

Let’s assume you have a virtual environment looking like this:

Assuming you have VMs at multiple levels of criticality, it becomes fairly difficult to create and maintain a zoning configuration based upon these levels. Obviously you can fool around with features like SDRS in VMware, but this does not give you the ability to carry the equivalent priority mapping all the way through the fabric and storage. If there is contention in the fabric and one or more VMs are competing for IOs and pushing frames, it’s the fabric that decides which frame goes first, on a best-effort basis, if these source and destination pairs sit in the same QoS level. Even though you may have multiple QoS zones working, how do you differentiate between the different levels of IO priority? Again, there are a few things you can do on a host level, but it is impossible to provide end-to-end QoS all the way to LUN level, which is where, in the end, the data will reside. Things become even more complex when you introduce multiple levels of tiering inside the storage infrastructure, as the figure below depicts:

This figure shows that inside the top storage array there are three levels of tiering, whereby the red can be flash-based, the green SAS-based and the blue SATA-based drives. To add to this you may even see externally attached arrays with some sort of archive tier. Given that these tiers are not static, and that an array may decide to upgrade or downgrade pages to certain tiers based upon the workload profile, there is no way to tell from a host perspective what’s going on at these levels, nor can it be determined what to expect from certain LBAs (Logical Block Addresses) over time. A response time of 0.8 ms on a LUN may be 5 ms a few minutes later. To get a grip on that, and to have more granular control plus better predictability of IO responses and priorities, we need to be able to set priorities on a per-frame basis. Hence let me introduce “CS_CTL”.

CS_CTL

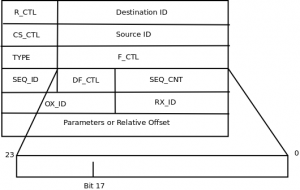

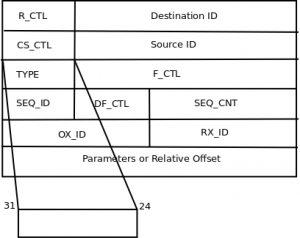

The acronym stands for Class Specific Control and was initially introduced to control Class 1 connection-oriented priorities and preemption. Later on in the development of FC this field was extended to be used in other classes as well. Since these days almost everything runs on class 3, I’ll focus on that class only.

The CS_CTL field is an 8-bit field located in the first octet of the second word of the FC frame header. This allows the ASICs to determine pretty early on what to do with the frame.

There are two options:

- Delivery preference.

- Priority and Preemption

Which of these policies is in effect is determined by two bit flags.

- Bit 17 of the F_CTL field

- Bit 31 of the CS_CTL field.

When bit 17 of the F_CTL field is set to 0 and bit 31 of the CS_CTL field is set to 1, the delivery preference policy is activated. With delivery preference FC uses a DSCP value (see RFC 2597 and RFC 2598) in bits 29 to 24 of the CS_CTL field to select the policy associated with that value.

When bit 17 is set to 1, FC switches to Priority and Preemption (which is what is used when enabling this in a Brocade fabric). In the CS_CTL field a value is set in bits 31 to 25 which determines the priority of that particular frame. Bit 24 was used to preempt and remove an existing class 1 connection if a class 3 frame has a higher priority. In today’s storage environments you will not see this anymore.
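As a rough illustration of the bit layout described above, the following sketch decodes the CS_CTL octet from the second header word and picks the policy based on F_CTL bit 17. The function name `decode_cs_ctl` and the returned dictionary layout are made up for this example, and the bit positions are simply the ones given in the text (numbered within the 32-bit header word), so treat it as a reading aid rather than a reference decoder.

```python
F_CTL_BIT_17 = 1 << 17  # selects Priority and Preemption when set


def decode_cs_ctl(word1, f_ctl):
    """Interpret CS_CTL for a class 3 frame.

    word1 -- the 32-bit second header word (CS_CTL in bits 31-24)
    f_ctl -- the 24-bit F_CTL field
    """
    cs_ctl = (word1 >> 24) & 0xFF
    if f_ctl & F_CTL_BIT_17:
        return {
            "policy": "priority_and_preemption",
            "priority": cs_ctl >> 1,         # word bits 31-25
            "preemption": bool(cs_ctl & 1),  # word bit 24
        }
    if cs_ctl & 0x80:                        # word bit 31
        return {
            "policy": "delivery_preference",
            "dscp": cs_ctl & 0x3F,           # word bits 29-24
        }
    # Neither flag set: no policy is described for this case in the text.
    return {"policy": "none"}


# Hypothetical header values, for illustration only.
print(decode_cs_ctl(0x06010203, f_ctl=1 << 17))
print(decode_cs_ctl(0x8A010203, f_ctl=0))
```

The lower 24 bits of the second word are ignored here on purpose; only the first octet matters for the QoS decision.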

The Brocade implementation

Brocade allows for two methods of enabling QoS on CS_CTL values.

- Default mode

- Auto mode

When configured for “Default mode”, the initiator (HBA) and target (array port or tape device) need to set a value in the CS_CTL field. The value in this field then determines which QoS group and VC the frame will be mapped to.

- QoS Low – values 1 to 8

- QoS Medium – values 9 to 16

- QoS High – values 17 to 24

When enabling this on a chassis you use the “configurechassis” command.

Switch_1-LS128:FID128:admin> configurechassis

Configure…

cfgload attributes (yes, y, no, n): [no]

Custom attributes (yes, y, no, n): [no]

system attributes (yes, y, no, n): [no]

fos attributes (yes, y, no, n): [no] y

CSCTL QoS Mode (0 = default; 1 = auto mode): (0..1) [0]

If the mode is set to “Auto”, there are only three values that need to be set by the HBA or array.

- QoS Low – 1

- QoS Medium – 2

- QoS High – 3

The switch will then automatically map the frames onto the right priority group and VC.
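The two value tables can be summarised in a small lookup, sketched here in Python. The mode numbers 0 and 1 are the ones offered by the configurechassis prompt; the function name `qos_level` is hypothetical, and returning `None` for values outside the listed ranges is an assumption of this sketch, since what the switch does with such values is not covered here.

```python
DEFAULT_MODE = 0  # "0 = default" in the configurechassis prompt
AUTO_MODE = 1     # "1 = auto mode"

AUTO_LEVELS = {1: "low", 2: "medium", 3: "high"}


def qos_level(cs_ctl_value, mode=DEFAULT_MODE):
    """Map a CS_CTL priority value to a QoS level name."""
    if mode == AUTO_MODE:
        return AUTO_LEVELS.get(cs_ctl_value)
    if 1 <= cs_ctl_value <= 8:
        return "low"
    if 9 <= cs_ctl_value <= 16:
        return "medium"
    if 17 <= cs_ctl_value <= 24:
        return "high"
    return None  # assumption: value not covered by the tables


print(qos_level(3))             # default mode
print(qos_level(3, AUTO_MODE))  # auto mode
```

Note how the same value lands in a different group depending on the chassis mode, so the HBA and array settings need to match whatever the switches are configured for.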

Support

Obviously, when you want to use this feature it needs to be supported by the end devices (HBA and target), plus you will need to enable it on the switches. This can be accomplished by enabling CS_CTL per port.

portcfgqos --enable <slot>/<port> csctl_mode

The CS_CTL feature takes precedence over zone-based QoS settings, so if you configure a QOSL or QOSH zone and incorporate a port which has CS_CTL enabled, the zone setting will not have any effect.

Switch_1-LS128:FID128:admin> portcfgshow 11

Area Number: 11

Octet Speed Combo: 1(16G|8G|4G|2G)

Speed Level: AUTO(SW)

AL_PA Offset 13: OFF

Trunk Port ON

Long Distance OFF

VC Link Init OFF

Locked L_Port OFF

Locked G_Port OFF

Disabled E_Port OFF

Locked E_Port OFF

ISL R_RDY Mode OFF

RSCN Suppressed OFF

Persistent Disable OFF

LOS TOV enable OFF

NPIV capability ON

QOS Port AE

Port Auto Disable: OFF

Rate Limit OFF

EX Port OFF

Mirror Port OFF

SIM Port OFF

Credit Recovery ON

F_Port Buffers OFF

E_Port Credits OFF

Fault Delay: 0(R_A_TOV)

NPIV PP Limit: 126

NPIV FLOGI Logout: OFF

CSCTL mode: OFF

D-Port mode: OFF

D-Port over DWDM: OFF

Compression: OFF

Encryption: OFF

FEC: ON

FEC via TTS: OFF

Non-DFE: OFF

After enabling this:

Switch_1-LS128:FID128:admin> portcfgqos --enable 11 csctl_mode

Enabling CSCTL mode flows causes QoS zone flows to lose priority on such ports. Do you want to proceed?(y/n):y

Switch_1-LS128:FID128:admin> portcfgshow 11

Area Number: 11

Octet Speed Combo: 1(16G|8G|4G|2G)

Speed Level: AUTO(SW)

AL_PA Offset 13: OFF

Trunk Port ON

Long Distance OFF

VC Link Init OFF

Locked L_Port OFF

Locked G_Port OFF

Disabled E_Port OFF

Locked E_Port OFF

ISL R_RDY Mode OFF

RSCN Suppressed OFF

Persistent Disable OFF

LOS TOV enable OFF

NPIV capability ON

QOS Port ON

Port Auto Disable: OFF

Rate Limit OFF

EX Port OFF

Mirror Port OFF

SIM Port OFF

Credit Recovery ON

F_Port Buffers OFF

E_Port Credits OFF

Fault Delay: 0(R_A_TOV)

NPIV PP Limit: 126

NPIV FLOGI Logout: OFF

CSCTL mode: ON

D-Port mode: OFF

D-Port over DWDM: OFF

Compression: OFF

Encryption: OFF

FEC: ON

FEC via TTS: OFF

Non-DFE: OFF

As you can see, the “QOS Port” value changes from “AE” to “ON” as well. This is all you need to configure on a switch level.
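If you want to check these two settings across many ports without reading the full listing each time, a small script can pick them out of captured portcfgshow output. The sketch below is a plain text parser, not a Brocade tool: the function name `parse_portcfgshow` is made up, the sample text is a shortened copy of the output above, and it simply assumes that the last whitespace-separated token on each line is the value.

```python
def parse_portcfgshow(text):
    """Turn captured portcfgshow output into a {setting: value} dict."""
    settings = {}
    for line in text.splitlines():
        # Split off the last token as the value; the rest is the name.
        parts = line.strip().rsplit(None, 1)
        if len(parts) != 2:
            continue  # blank or single-word line
        name, value = parts
        settings[name.rstrip(":")] = value
    return settings


# Shortened sample taken from the listing above.
sample = """\
Area Number: 11
Trunk Port ON
QOS Port ON
CSCTL mode: ON
Fault Delay: 0(R_A_TOV)
"""

cfg = parse_portcfgshow(sample)
print("CSCTL mode:", cfg["CSCTL mode"])
print("QOS Port:", cfg["QOS Port"])
```

Splitting from the right keeps the parser indifferent to whether a line uses a colon or column alignment, both of which appear in the output.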

Device support

The previous paragraphs described the exciting stuff. Here’s the slap in the face: as far as I know there is currently only one (yes, ONE) vendor who supports the feature on an HBA. Emulex calls this “ExpressLane”. I haven’t been able to obtain roadmaps from other (storage) vendors, but as far as I know none of them currently supports this. I would certainly urge you to start asking your vendor representatives for this, because it can really be a game-changer in storage environments.

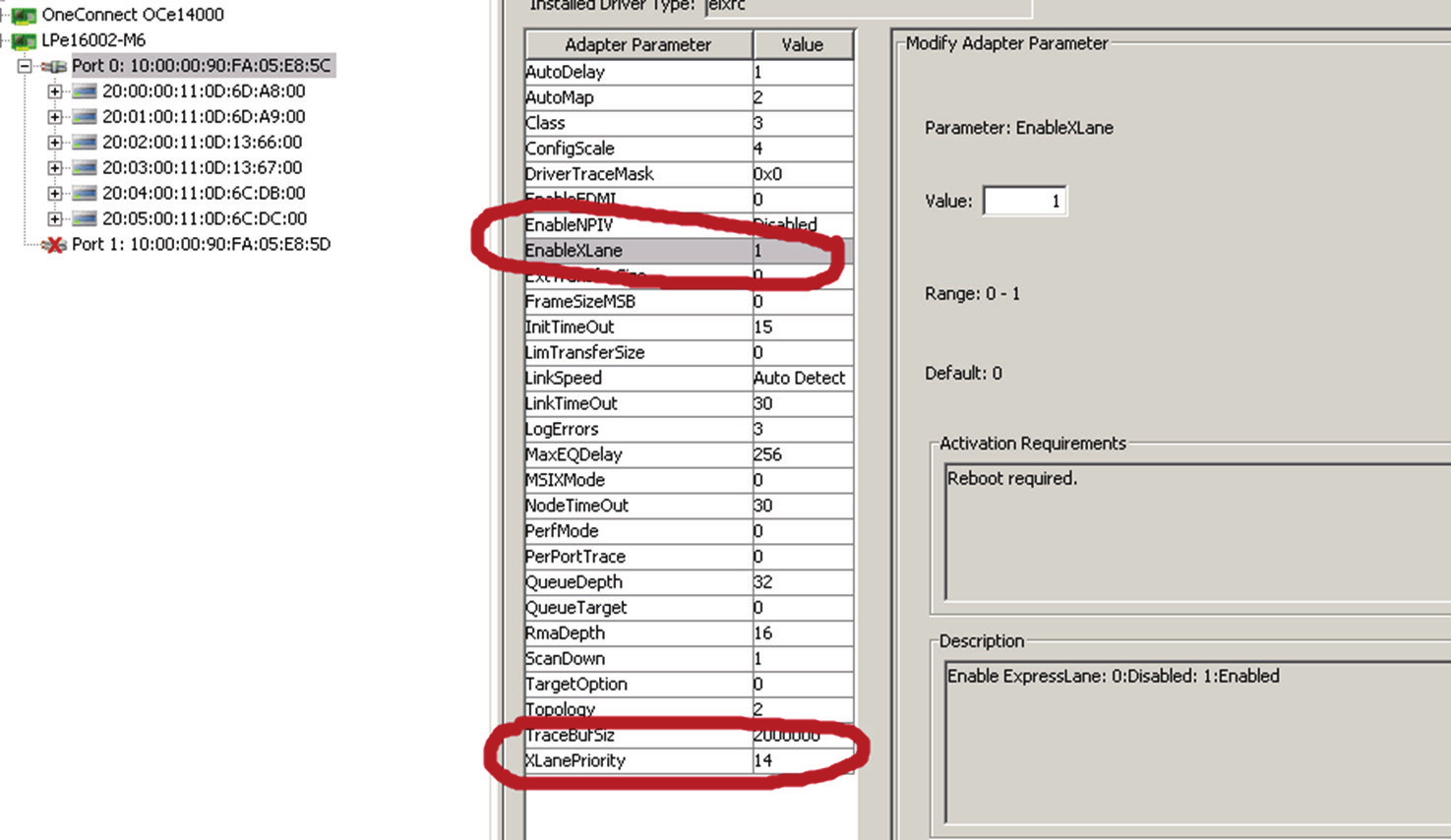

Let’s have a look at how Emulex implements this.

Although Brocade supports the feature on both 8G (Condor 2 / Goldeneye 2) and 16G (Condor 3) platforms, Emulex only has it available on their LPe16xxx adapters. It’s a no-cost feature from both Brocade (if you run later FOS code levels) and Emulex, so it is really a no-brainer to explore the options.

By default the feature is disabled and you have to enable it via the Emulex OneCommand Manager (OCM). The feature is enabled on a per-port basis, which basically means you enable it and set a priority as shown below.

Just setting the above two options does not actually do much. It enables the algorithm and sets up the memory structures required to support the feature, but that’s it. It’s like starting a car: without shifting it into gear it won’t move.

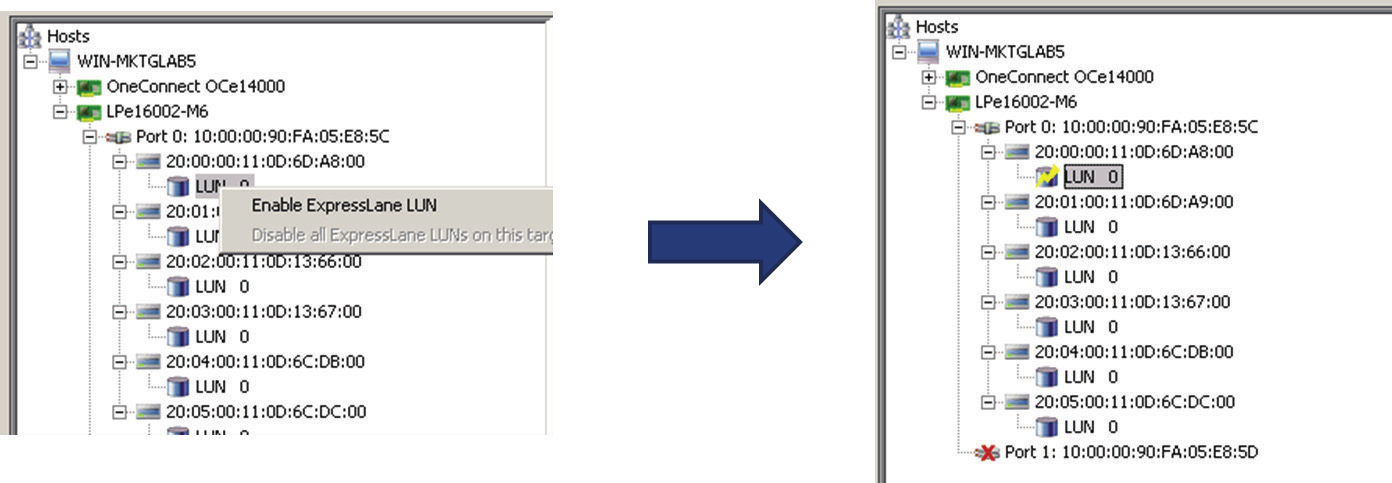

To really get ExpressLane to work you need to select the LUNs listed under the target ports, right-click on the respective LUN(s) and select “Enable ExpressLane LUN”.

The LUNs that have the feature activated will show a lightning bolt icon.

Use cases

When you have it enabled on the HBA side you will notice improved throughput, primarily on WRITE IOs. The simple reason is that for writes the majority of data flows from HBA to array. On READ IOs it is the other way around; however, when the array does not support the CS_CTL priority feature these frames will not get tagged as such, so the fabric cannot map them onto the respective VCs and you will not see an improvement here.

Assuming both sides do support this feature, you can create environments whereby in a tiered infrastructure you enable LUNs sitting on flash drives and leave the others off. Another scenario could be to have multiple HBA ports connected to the same array within the same FC fabric. In that case you can differentiate with different priority values in order to obtain QoS from end to end.

An even better solution would be, in case of array support, to have the option to set different DSCP values on different LUNs based upon certain policies, which could then hook into dynamic tiering etc.; however, this is not available (yet).

Again, start asking your vendor for support. From a programming perspective it’s not really difficult to achieve, and the majority of the work of prioritizing frames is handled by the already existing fabric technology anyway. Future developments may include more dynamic end-to-end workload profiling which allows hosts, fabrics and storage to be set to appropriate levels.

Hope this explains the CS_CTL feature a bit.

Kind regards,

Erwin van Londen

Erwin, you say “based on the QOS zone mapping and if contention occurs the priorities of the frames being sent over the ISL is set to a 60%/30%/10% ratio. ” – is 60/30/10 ratio something what is hardcoded and not customizable? When we asked Brocade about how QOS internally works they never said about such ratio.

I thought this ratio is valuable always and QOSH zones traffic have priority on ISLs just because VCs 10-14 have dedicated 5 buffer credits each (when QOSL zones have only two of them and QOSM can borrow credits). Now I’m bit confused.

Hello Roman,

This is not new and has been in FOS for a long time. I’m curious why Brocade is so secretive about this; it is mentioned in their training material. Be aware that this algorithm only kicks in when contention occurs and that it is fixed in the ASIC code. Although you have virtual channels, the link itself remains a serial line and frames can only be sent one after the other. If you have multiple frames arriving at the switch from different originators which are mapped to traverse a particular ISL, the order in which they are sent depends on arrival. However, if the ingress buffers of each of these originators are filled up, the frames are sent onto this ISL in a 60/30/10 ratio. So you will get 6 frames if the destination is in a QoSH zone, then 3 frames when it is in a QoSM zone and 1 frame for a QoSL zone. This prevents a higher QoS level from locking out any lower one, so frames with lower QoS values will still traverse the ISL. Without it, a very busy system mapped to a QoSH zone could potentially prevent all other traffic in QoSM and QoSL from being moved over an ISL. By applying this ratio, FOS still provides a relatively fair queuing mechanism which shouldn’t cause issues on hosts. Obviously, when you run into these situations it would be wise to start redesigning your infrastructure and increase the capacity on the ISLs to accommodate more traffic.

Hope this explains the situation.

Kind regards,

Erwin

Thanks Erwin. Now it’s more clear than it was before 🙂

Hello Erwin! Thanx a lot for this post. Just one question regarding CS_CTL values with Default mode. As I see ranges 1-8, 9-16 and 17-24 are not correspond to the number of VCs. How switch will interpret this? As kind of priorities? Thus will it allow the bigger percentage of High Priority VCs usage for frames with value 24 than for those with value 17?

Each of these values is mapped to the LOW, MED and HIGH VC groups in a round-robin fashion, as far as I’ve been able to determine. Be aware that these values are defined in the RFCs I mentioned. I wouldn’t be surprised if the QoS functionality of the CS_CTL field is extended to FCIP configurations, where these DSCP values can be used to propagate the QoS priorities into IP WAN networks.

Cheers

I extended my question with assumption of some kind of priority. This will win with CS_CTL not only from the fact that it works with the particular LUN, but have a much higher level of granularity in the distribution of traffic.

As opposed to the IP layer capabilities where there are more options to choose from obviously. On the Brocade side we’re restricted to 3 QoS levels hence why they grouped these values into 3 sections. There is no further prioritization within these groups.